In [1]:

from lec_utils import *

Discussion Slides: Clustering

Agenda 📆¶

- Unsupervised learning.

- Minimizing inertia.

- $k$-means clustering.

Unsupervised learning¶

- Suppose you're given a dataset where each point has several features $X$, but no labels $y$.

- Labels are an essential ingredient to the supervised algorithms we've learned about like linear regression, which learns a hypothesis function to predict a target $y$ given features $X$.

So we can't run supervised learning. What can we do?

- One idea: Find groups of points in our dataset which are similar to one another.

These groups are called clusters.

Minimizing inertia¶

- There are several ways to define clusters.

One way is to define a set of $k$ centroids, or cluster centers. Each point belongs to the centroid it is closest to.

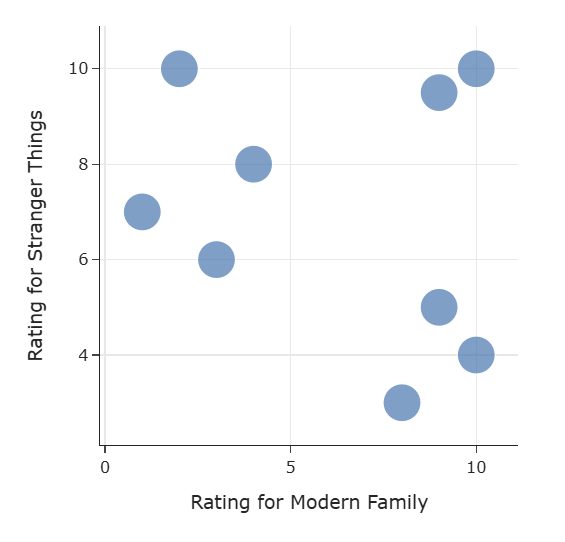

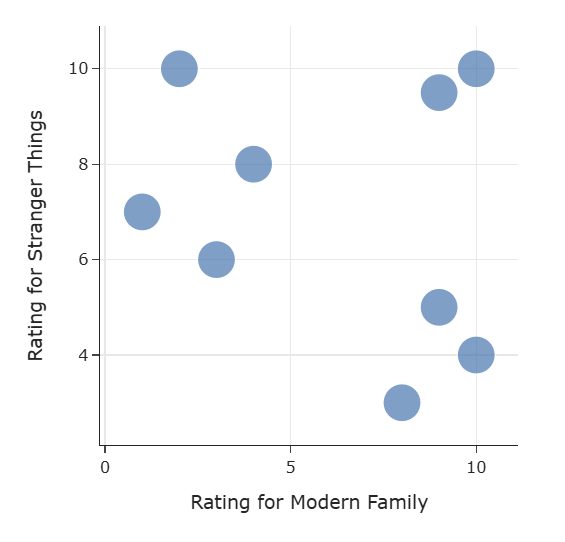

Once we've put users into clusters, we can make better recommendations based on the preferences of other users in the same cluster.

- How do we place our centroids optimally?

- Inertia is an objective function we can use to measure how well a dataset was clustered. It's calculated by measuring the ($L_2$) distance between each data point and its closest centroid, squaring this distance, and summing this value across all points.

- We want to find the centroids $\vec \mu_1, \vec \mu_2, ..., \vec \mu_k$ that minimize inertia, but there are $k^n$ possible assignments of points to clusters. It would be computationally infeasible to try them all.

Instead, we'll use an iterative algorithm to (try to) find the centroid locations that minimize inertia!

$k$-means clustering¶

- $k$-means is one of the most popular clustering algorithms, and is designed to minimize inertia.

- $k$-means finds the best centroids by initializing $k$ centroids randomly, and then alternating between:

- Assigning each point to the nearest centroid.

- Moving each centroid to the center of its group.

- Let's try a couple runs of $k$-means clustering here.